As mentioned in a previous post, we started building Gimme Bar a little over a year ago. We did a lot of things right, but we also did some things wrong.

Since—in those early days—I was the only developer, and since most of my professional development experience is in PHP, that choice was obvious. I also started building the API before the front-end. I chose a really simple routing model for the back-end, and got to work, sans framework. Our back-end code is still really lean (for the most part), and I'm still (mostly (-: ) proud of it.

When it came time to select a datastore, I chose something a bit more risky, with Cameron's blessing.

Having just spent the best part of a year and a half working with PostgreSQL at OmniTI, I felt it was time to try something new. We knew this carried risks, but the timing was good, and—quite frankly—I was simply bored of hacking on stored procedures in PL/pgSQL. We wanted something that could be expected to scale (eventually, when we need it), without deep in-house expertise, but also something that I'd find fun to work on. I love learning new things, so we thought we'd give one of the NoSQL solutions a whirl.

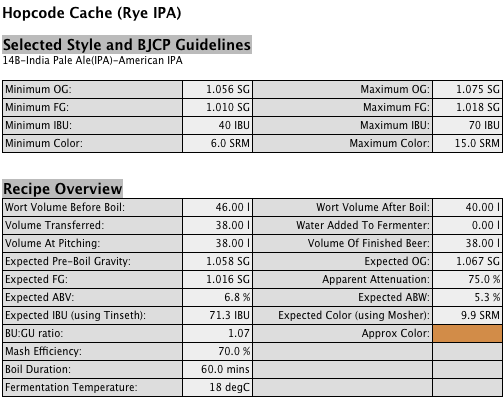

In those days (January 2010), the main NoSQL contenders for building a web application were—at least in our minds—CouchDB and MongoDB. Also in those days, MongoDB didn't quite feel like it was ready for us. I could, of course, be wrong, but I figured that since I didn't know either of these systems very well, the best way to find out was to just pick one and go with it. The thing that ultimately pushed us to the CouchDB camp was a mild professional relationship with some of the CouchDB folks. So, we built the first versions of Gimme Bar on top of Linux, Apache, PHP 5.3, Lithium (on the front-end), jQuery and CouchDB.

By summer 2010, we began work on adding social features (which have since been hidden) to Gimme Bar, and CouchDB started giving us trouble. This wasn't CouchDB's fault, really. It was more of an architectural problem. We were trying to solve a relational problem with a database that by-design knew nothing about how to handle relationships.

Now might be a good time to explain document-independence and map/reduce, but I fear that would take up more attention than you've kindly offered to this article, and it's going to be long even without a detailed tutorial. Here's the short version: CouchDB stores structured objects as (JSON) documents. These documents don't know anything about their peers. To "query" (for lack of a better term) Couch, you need to write a map function (in JavaScript or Erlang, by default) that is passed all documents in the database and emits keys and values to an index that matches your map's criteria. These keys can be (roughly) sorted, and to "query" your documents, you jump to a specific part of this sorted index and grab one or more documents in the sequence. From what I understand of map/reduce (and my only practical experience so far is with CouchDB), this is how other systems such as Hadoop work, too.

There is tremendous value to a system like this. Once the index is generated, it can be incrementally updated, and querying a huge dataset is fast and efficient. The reduce side of map/reduce (we had barely a handful of reduce functions) is also incredibly powerful for calculating aggregates of the map data, but it's also intentionally limited to small subsets of the mapped data. These intentional limits allow map/reduce functions to be highly parallelizable. To run a map on 100 servers, the dataset can be split into 100 pieces, and each server can process its individual chunk safely and in parallel.

This power and flexibility has an architectural cost. Over a decade of professional development with various relational databases taught me that in order to keep one's schema descriptive and robust, one must always (for small values of "always") keep data normalized until a performance problem forces denormalization. With a document-oriented datastore like CouchDB or MongoDB, denormalization is part of the design.

A while ago, I made an extremely stripped-down example of how something like user relationships are handled in Gimme Bar with CouchDB. This document is for the user named "aaron" (_id: c988a29740241c7d20fc7974be05ec54). Aaron is following bob (_id: c988a29740241c7d20fc7974be05f67d), chris (_id: c988a29740241c7d20fc7974be05ff71), and dale (_id: c988a29740241c7d20fc7974be060bb4). You can see the references to the "following" users in aaron's document. I also published example maps of how someone might go about querying this (small) set.

The specific problem that we ran into with CouchDB is that our "timeline" page showed the collected assets of users that the currently-logged-in user is following. So, aaron would see assets that belong to bob, chris and dale. This, in itself, isn't terribly difficult; we just needed to query once for each of aaron's follows. The problem was further complicated when a requirement was raised to not only see the above, but also to collapse duplicates into one displayed asset (if bob and chris collected the same asset, aaron would only see it once). Oh, and also, these assets needed to be sorted by their capture time. These requirements made the chain of documents extremely complicated to query. In a relational system, a few (admittedly expensive) joins would have taken care of it in short order.

I spent a lot of time fighting with CouchDB to solve this problem. I asked in the #couchdb channel on Freenode, posted to the mailing list and even resorted to StackOverflow (a couple times) before coming up with a "solution." I put the word "solution" in quotes there because what I was told to do only partially solved our problem.

The general concensus was that we should denormalize our follow/following + asset records in an extreme way (as you can see in the StackOverflow posts, above). I ended up creating an interim index of all of a user's followers/following links, plus an index of all of the media hashes (what we use to uniquely identify assets, even when captured by different users). Those documents got pretty big pretty quickly (even though we had less than 100 users at the time). Here's an example: Cameron's FollowersIndex document.

As you might guess, even a system designed to handle large documents like this (such as CouchDB) would have a hard time with the sheer size. Every time an asset was captured, it would get injected into the FollowersIndex documents, which caused a reindex… which used up a lot of RAM, and caused bottlenecks. Severe bottlenecks. Our 8GB of RAM was easily exhausted by our JavaScript map function. Think about that. 8GB… for <100 users. This was not going to survive. Turns out we were exhausting Erlang's memory allocator and actually crashing CouchDB. From userspace. I asked around, and the proposed solution to this problem-within-a-problem was to re-write the JavaScript map as Erlang to avoid the JSON conversion overhead. At this point, I was desperate. I had Evan (who is a valuable member of the team, and is a far superior computer scientist to me) translate the JS to Erlang. What he came up with made my head hurt, but it worked. And by "worked," I mean that it didn't crash CouchDB and send it into a recovery spiral (crash, respawn, reindex, crash, repeat)… but it did work. Enough to get us by for a few weeks, and that's what we did: get by. The index regeneration for the friends feed was so slow that I had to use delayed indexes and reindex in cron every minute. CouchDB was using most of our computing resources, and we knew we couldn't sustain any growth with this system.

At this point, we decided to cut our losses, and I went to investigate other options, including MySQL and MongoDB. My next blog post will be on why I think MongoDB is a superior solution for building web applications, despite CouchDB being better in certain areas.